We’re No Longer Dealing with Just Software…

For years, we talked about artificial intelligence as if it were something purely software-based. An algorithm. A model. At most, an online service running quietly on remote servers. But in 2025, that picture no longer matches reality.

AI today isn’t just code. Behind every language model, every generated image, and every fast response we get, there’s a large, physical system at work: data centers, powerful processors, cooling systems, electricity transmission lines, and an entire energy supply chain holding it all together.

Put simply, AI no longer lives only on our phone or laptop screens. It exists on the ground: in power plants, in water consumption, and even in national carbon balances. That’s why the main question today is no longer “What can AI do?” The more important question is: What does this power cost the planet?

This article doesn’t aim to exaggerate or scare. No emotional comparisons. No media-driven number games. Just one straightforward question: At what scale is AI consuming resources?

Why Comparing AI to a “Country” Makes More Sense Than Comparing It to “People”

You’ve probably seen infographics or posts comparing AI’s energy or water use to “one person” or “one million people.” These comparisons might feel shocking at first, but analytically, they’re flawed. Why? Because AI doesn’t consume resources the way people or households do.

AI is a concentrated industrial activity. Its consumption looks like that of a factory, not like the electricity used by lights and refrigerators at home. When we compare an industrial system to household consumption, we’re mixing two completely different scales.

But when we compare AI’s consumption to that of an industrialized country, the picture becomes much more realistic. A country that:

- has industry

- runs a nationwide electricity grid

- uses water at an industrial scale

- and has a clearly defined carbon footprint

That’s when it becomes clear that AI is no longer a small tool. It’s operating at the same level as national infrastructure.

How the Comparison Works: What We’re Actually Measuring

To keep this comparison fair and defensible, a few basic principles were followed.

First, all AI-related figures are based on mid-range estimates, not worst-case or alarmist scenarios. The goal here isn’t to scare anyone—it’s to show the real scale of what’s happening.

Second, instead of comparing AI to population numbers, the benchmark used is a medium-sized industrialized European country. Countries like Sweden or the Netherlands are good references because their data is transparent and their infrastructure reflects modern industrial systems.

Third, when it comes to water, the focus is strictly on industrial and mains water use, not total national water withdrawal. AI, after all, is an industrial activity, not agriculture.

Electricity: When AI’s Power Use Matches That of a Country

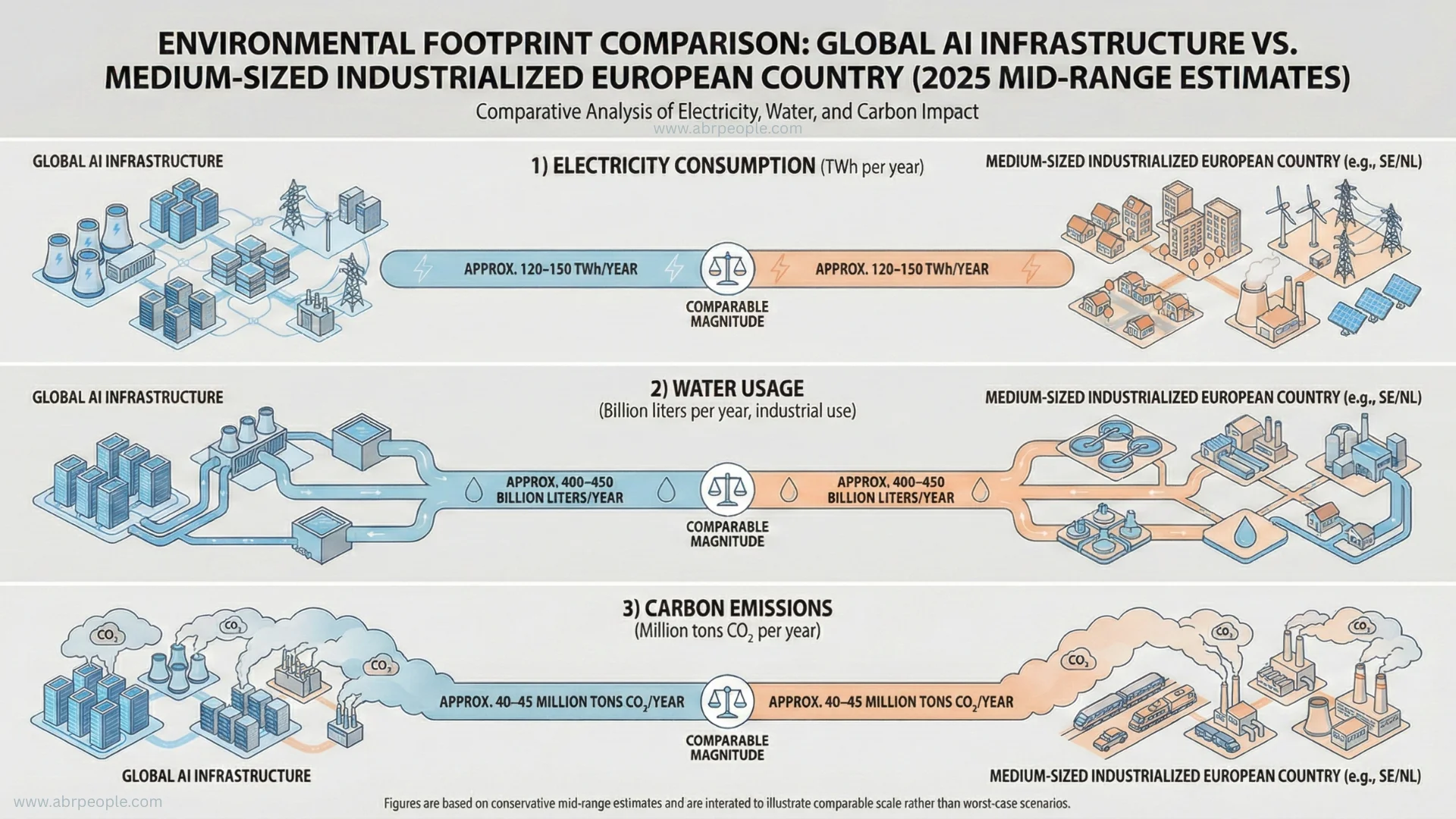

Based on scientific research and international energy reports, electricity consumption linked to global AI infrastructure in 2025 is estimated at around 120 to 150 terawatt-hours per year. This includes model training, everyday inference, data center operations, and part of the indirect energy use.

Now place that number next to the electricity consumption of an industrialized country. Sweden’s annual electricity use is about 137 TWh, and the Netherlands sits in roughly the same range. From an electricity perspective, global AI is approaching the scale of an entire country.

But the story isn’t just about the number. The pattern of consumption matters too. AI electricity use is:

- highly concentrated

- almost constant

- and dependent on very high reliability

Unlike household consumption, which goes up and down, AI data centers must run around the clock. That puts direct pressure on local power grids and turns energy from a technical issue into a strategic one.
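To make the comparison concrete, here is a minimal Python sketch of the arithmetic. It uses only the figures quoted above (120 to 150 TWh for AI, about 137 TWh for Sweden). The function names are made up for illustration, and the average-power conversion assumes a perfectly flat load all year, which is a simplification of the "almost constant" pattern described above.

```python
# Figures quoted in this article, in TWh per year.
AI_TWH_LOW, AI_TWH_HIGH = 120.0, 150.0
SWEDEN_TWH = 137.0

HOURS_PER_YEAR = 8760  # 365 days * 24 hours, ignoring leap years


def share_of_benchmark(ai_twh: float, country_twh: float) -> float:
    """Return AI consumption as a fraction of the benchmark country's use."""
    return ai_twh / country_twh


def average_power_gw(twh_per_year: float) -> float:
    """Convert annual energy (TWh) into average continuous power (GW).

    Assumes a perfectly flat load over the whole year (a simplification).
    """
    gwh_per_year = twh_per_year * 1000.0  # 1 TWh = 1000 GWh
    return gwh_per_year / HOURS_PER_YEAR


if __name__ == "__main__":
    for label, twh in (("low", AI_TWH_LOW), ("high", AI_TWH_HIGH)):
        share = share_of_benchmark(twh, SWEDEN_TWH)
        power = average_power_gw(twh)
        print(f"{label}: {share:.0%} of Sweden, about {power:.1f} GW on average")
```

Under these assumptions, the range works out to roughly 88% to 109% of Sweden’s annual use, or about 13.7 to 17.1 GW of demand that never switches off.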

Water: The Cost That Often Goes Unnoticed

When people talk about AI’s resource use, water usually stays in the background. But the reality is simple: without water, much of this infrastructure wouldn’t work at all. Data center cooling systems, power plants, and even hardware manufacturing all depend on water.

Conservative estimates suggest that AI-related water consumption in 2025 is around 400 to 450 billion liters per year. Compared with the industrial water use of a European country, that number is surprisingly similar. For example, industrial mains water use in the Netherlands falls within this same range.
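Billions of liters are hard to picture, so a quick unit conversion helps. The sketch below converts the water range quoted above into cubic kilometers and then divides it by the electricity range from the previous section to get a rough "liters per kWh" figure. That ratio is only an implied value derived from this article’s own estimates, not a measured one, and pairing the ends of the two ranges assumes they vary independently. The function names are illustrative.

```python
LITERS_PER_CUBIC_METER = 1_000
CUBIC_METERS_PER_CUBIC_KM = 1_000_000_000
KWH_PER_TWH = 1_000_000_000

# Ranges quoted in this article.
WATER_LITERS = (400e9, 450e9)     # liters per year
ELECTRICITY_TWH = (120.0, 150.0)  # TWh per year


def liters_to_cubic_km(liters: float) -> float:
    """Convert liters to cubic kilometers."""
    return liters / LITERS_PER_CUBIC_METER / CUBIC_METERS_PER_CUBIC_KM


def implied_liters_per_kwh(water_liters, electricity_twh):
    """Return the (lowest, highest) liters per kWh implied by two ranges.

    The lowest ratio pairs the smallest water figure with the largest
    electricity figure; the highest ratio does the opposite.
    """
    low_water, high_water = water_liters
    low_twh, high_twh = electricity_twh
    lowest = low_water / (high_twh * KWH_PER_TWH)
    highest = high_water / (low_twh * KWH_PER_TWH)
    return lowest, highest


if __name__ == "__main__":
    low_km3, high_km3 = (liters_to_cubic_km(x) for x in WATER_LITERS)
    print(f"Water: {low_km3:.2f} to {high_km3:.2f} cubic kilometers per year")
    low, high = implied_liters_per_kwh(WATER_LITERS, ELECTRICITY_TWH)
    print(f"Implied intensity: {low:.2f} to {high:.2f} liters per kWh")
```

In other words, the quoted range is about 0.40 to 0.45 cubic kilometers of water a year, or very roughly 2.7 to 3.8 liters for every kilowatt-hour, if the two estimates are taken at face value.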

What makes this especially important is where that water is used. Many data centers are built in regions that:

- are already climate-sensitive

- or face increasing water stress

That’s why expanding AI infrastructure isn’t just a technological decision. It’s also an environmental and social one.

Carbon: When AI Takes on an Industrial Footprint

Every unit of electricity and water consumed comes with a carbon cost. Given today’s global energy mix and mid-range projections, AI-related carbon emissions in 2025 are estimated at around 40 to 45 million tons of CO₂ per year.

That’s roughly equivalent to the annual emissions of an industrialized country like Sweden—a country often seen as relatively advanced in environmental policy. This comparison shows that even without worst-case assumptions, AI on its own is becoming a serious player in the global carbon equation.
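One way to sanity-check these numbers is to ask what carbon intensity they imply. The sketch below divides the emissions range (40 to 45 million tons) by the electricity range quoted earlier (120 to 150 TWh). It rests on two assumptions that are not stated in the estimates themselves: that "tons" means metric tonnes, and that all of the emissions can be attributed to electricity. Treat the result as a rough plausibility check, not a measurement.

```python
GRAMS_PER_TONNE = 1_000_000
TONNES_PER_MEGATONNE = 1_000_000
KWH_PER_TWH = 1_000_000_000

# Ranges quoted in this article.
EMISSIONS_MT = (40.0, 45.0)       # million tonnes of CO2 per year (assumed metric)
ELECTRICITY_TWH = (120.0, 150.0)  # TWh per year


def implied_grams_co2_per_kwh(emissions_mt, electricity_twh):
    """Return the (lowest, highest) g CO2 per kWh implied by two ranges.

    Assumes every tonne of emissions comes from electricity use, which
    overstates the electricity share if other sources are included.
    """
    low_mt, high_mt = emissions_mt
    low_twh, high_twh = electricity_twh
    grams_per_mt = TONNES_PER_MEGATONNE * GRAMS_PER_TONNE
    lowest = low_mt * grams_per_mt / (high_twh * KWH_PER_TWH)
    highest = high_mt * grams_per_mt / (low_twh * KWH_PER_TWH)
    return lowest, highest


if __name__ == "__main__":
    low, high = implied_grams_co2_per_kwh(EMISSIONS_MT, ELECTRICITY_TWH)
    print(f"Implied intensity: {low:.0f} to {high:.0f} g CO2 per kWh")
```

Under those assumptions, the implied intensity lands at roughly 267 to 375 grams of CO₂ per kilowatt-hour.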

Part One Summary: AI’s Role Has Changed

By this point, one thing is clear:

In 2025, AI is no longer just a smart digital tool. It’s infrastructure at a national scale—infrastructure that:

- consumes electricity

- uses large amounts of water

- and produces carbon emissions

In the second part of this article, we’ll move on to harder questions:

Can efficiency slow this trend down?

What role do governments and regulation play?

And is it even possible to imagine a future where AI is both advanced and sustainable?

Can Efficiency Actually Solve the Problem of AI Consuming Resources?

The first reaction to discussions about AI’s resource consumption is usually the same:

“Models are getting more efficient. Hardware is improving. So the problem will solve itself.”

That’s partly true—but it’s not the whole story.

In recent years:

- chips have become more efficient

- models have been better optimized and compressed

- energy per operation has gone down

At the same time, something bigger has happened: demand has grown much faster than efficiency.

As AI has become cheaper, faster, and easier to use, its applications have exploded. What used to exist only in research labs is now everywhere—search engines, content creation, design, programming, education, and everyday conversations. That means lower energy use per request doesn’t automatically lead to lower total consumption.

This is exactly what economists call the Jevons Paradox:

when a technology becomes more efficient, total consumption often increases, because people use it more.

So the short answer is simple:

efficiency is necessary, but it’s not enough.
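The logic is easy to see with a toy calculation. In the sketch below, every number is hypothetical and chosen only to illustrate the mechanism: energy per request is cut in half while the number of requests quadruples. Total consumption is simply energy per request multiplied by the number of requests.

```python
def total_energy_wh(energy_per_request_wh: float, requests: int) -> float:
    """Total energy is energy per request times the number of requests."""
    return energy_per_request_wh * requests


def break_even_demand_growth(efficiency_gain: float) -> float:
    """Return the demand multiplier at which total energy stays flat.

    efficiency_gain is the fractional cut in energy per request,
    for example 0.5 for a 50% reduction.
    """
    if not 0.0 <= efficiency_gain < 1.0:
        raise ValueError("efficiency_gain must be in the range [0, 1)")
    return 1.0 / (1.0 - efficiency_gain)


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    before = total_energy_wh(1.0, 1_000_000)  # 1 Wh per request
    after = total_energy_wh(0.5, 4_000_000)   # half the energy, 4x the requests
    print(f"Total energy changed by a factor of {after / before:.1f}")
    print(f"Break-even demand growth: {break_even_demand_growth(0.5):.1f}x")
```

With a 50% efficiency gain, total consumption only falls if demand grows by less than 2x. In this made-up scenario demand grows 4x, so total energy use doubles even though each request is twice as efficient.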

This Isn’t Just a Technology Issue. It’s a Policy Issue.

Up to this point, it might sound like a purely technical problem: better chips, lighter models, cooler data centers. But a large part of the story has nothing to do with engineering—it has to do with political and economic decisions.

There are a few key questions here:

- Where are data centers built?

- What kind of energy do they use?

- Where does cooling water come from?

- Who is responsible for the environmental impact?

These aren’t questions engineers can answer on their own. They’re decisions made at the national level—and sometimes beyond.

When a country approves large-scale data center projects to attract investment, it’s effectively deciding:

- how its electricity grid will be used

- how local water resources will be allocated

- and how much carbon enters its national balance

At that point, AI stops being just an “innovative technology.”

It becomes a governance issue.

Renewable Energy for AI: A Real Solution or a Comfortable Illusion?

It’s often said that the problem will be solved because data centers are switching to renewable energy. That sounds reassuring—but it needs a closer look.

Yes, many large tech companies:

- sign renewable power purchase agreements

- claim carbon neutrality on paper

- publish sustainability reports

But there are a few important realities.

First, renewable generation capacity is limited at any given time. When massive data centers consume renewable electricity, they’re using power that could have replaced fossil fuels in other sectors.

Second, renewable energy still needs infrastructure: land, materials, transmission networks, and time. None of that appears overnight.

Third, many data centers are still connected to grids where the energy mix isn’t fully clean, for technical and reliability reasons.

So renewables are part of the solution—but without broader planning, they risk shifting the problem rather than solving it.

Transparency: The Missing Link in Today’s AI Ecosystem

One of the biggest problems right now is the lack of clear visibility into AI’s real resource use. Companies usually:

- don’t publish precise energy figures for individual models

- don’t report water consumption separately

- and present carbon emissions in aggregated, high-level numbers

As a result, most analyses rely on estimates and modeling.

Without transparency, regulation becomes guesswork. You can’t manage or regulate something if you don’t really know how much it consumes.

That’s why one of the most important steps toward a sustainable AI future isn’t a new chip or a better model—it’s standardized, transparent reporting.
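What might such a report contain? The sketch below is purely hypothetical: `ModelResourceReport` and its fields are not part of any existing standard or company disclosure. It simply turns the three gaps listed above (per-model energy, separate water figures, and non-aggregated carbon numbers) into a small data structure, with placeholder values in the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelResourceReport:
    """Hypothetical per-model disclosure record (not an existing standard)."""

    model_name: str
    reporting_year: int
    energy_kwh: float     # electricity attributed to this model
    water_liters: float   # reported separately from energy
    co2_kg: float         # emissions for this model, not a company-wide total
    requests_served: int

    def __post_init__(self) -> None:
        # Per-request figures are undefined without at least one request.
        if self.requests_served <= 0:
            raise ValueError("requests_served must be positive")

    def energy_per_request_wh(self) -> float:
        """Average energy per request, in watt-hours."""
        return self.energy_kwh * 1000.0 / self.requests_served


if __name__ == "__main__":
    # Placeholder values for illustration only, not real measurements.
    report = ModelResourceReport(
        model_name="example-model",
        reporting_year=2025,
        energy_kwh=1000.0,
        water_liters=2000.0,
        co2_kg=300.0,
        requests_served=1_000_000,
    )
    print(f"{report.model_name}: {report.energy_per_request_wh():.2f} Wh per request")
```

The point of a structure like this is comparability: if every provider reported the same fields in the same units, regulators and researchers could work from data instead of modeling.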

Can We Stop AI’s Growth?

Let’s be realistic. Stopping the growth of AI isn’t possible—and it wouldn’t make sense anyway. AI increases productivity, simplifies work, and creates real value in many areas.

The real question isn’t “Should we stop AI?”

It’s:

- where does AI use genuinely create value?

- where is it driven mostly by convenience or entertainment?

- and which uses deserve lower priority when resources are limited?

Not all AI applications are equal. Medical research, energy optimization, and transportation planning are not on the same level as endless novelty image generation. When planetary resources are finite, those differences start to matter.

A Possible Future: AI as Responsible Infrastructure

If we’re realistic, the future of AI will likely sit somewhere between two extremes:

not a technological apocalypse, but not a free, costless digital dream either.

In that future:

- AI is treated as infrastructure, not just a fun tool

- its consumption is included in national energy and water planning

- regulations are built on real data

- and decisions about AI expansion resemble decisions about power plants or heavy industry

This shift in perspective might be the most important step of all. As long as AI feels “invisible,” its impacts stay invisible too.

Final Thought: AI’s Resource Consumption Is Our Problem to Solve

In 2025, artificial intelligence is no longer just a topic for engineers and developers. It’s a concern for economists, policymakers, urban planners, and everyday citizens.

When AI’s electricity, water, and carbon use reach the scale of a country, we can’t talk about it only with technological excitement. We have to talk about costs, priorities, and trade-offs.

The future of AI doesn’t depend only on smarter algorithms. It depends on how intelligently we manage the resources we choose to give it. And that may be the most important decision we still haven’t fully made.