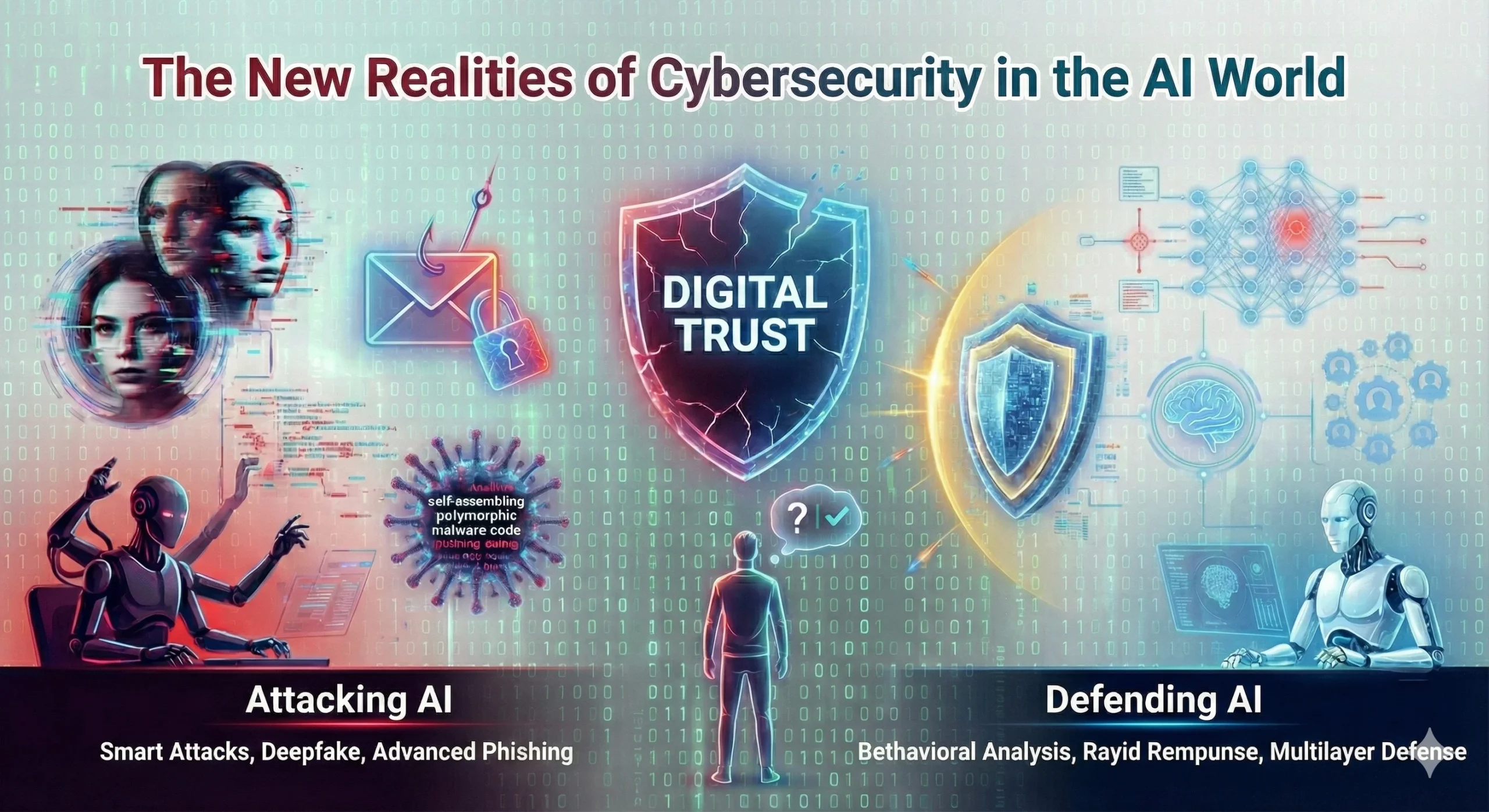

Cybersecurity is no longer just about viruses and antivirus software. The digital world has changed significantly, and the line between normal system behavior and a cyberattack is becoming increasingly blurred. One of the main reasons for this shift is the arrival of artificial intelligence as a serious attack tool. Tools that were once limited to large technology companies are now widely available, and attackers are using them to make cyberattacks faster, more precise, and harder to detect.

Until a few years ago, most cyberattacks required deep technical knowledge, manual effort, and a lot of time. Attackers had to write code, prepare phishing emails one by one, and carefully choose their targets. Today, artificial intelligence has automated many of these steps. Systems can collect information about victims, analyze behavior patterns, and even decide when and how to attack. As a result, the gap between simple attacks and advanced attacks has become much smaller.

One of the clearest examples of this change can be seen in phishing attacks. Traditional phishing emails were often easy to spot. They contained spelling mistakes, strange wording, generic messages, or suspicious requests. Modern phishing attacks, however, look very different. With the help of artificial intelligence, attackers can generate emails that sound natural, are free of errors, and closely match the recipient’s professional or personal context. When a message looks familiar and relevant, detecting the threat becomes much more difficult, especially during a busy workday.

This evolution is not limited to email. Social engineering has entered a new phase. Artificial intelligence allows attackers to adapt their language, tone, and timing to each individual. Systems can estimate who is under pressure, who is more likely to respond quickly, and which approach is most effective. As a result, attacks are no longer random. They are targeted, calculated, and carefully designed to exploit human behavior rather than technical weaknesses.

Another growing concern is deepfake technology. Artificial intelligence can now recreate realistic voices, images, and even videos of real people. What once seemed like a novelty has become a serious security risk. There have already been real cases where companies lost large amounts of money after receiving phone calls that appeared to come from senior executives. These attacks did not rely on malware or system vulnerabilities, but on trust. They demonstrate that seeing or hearing something is no longer enough to confirm its authenticity.

Malware has also evolved. Traditional malware followed predictable patterns and could often be detected using known signatures. New generations of malware behave very differently. They can adapt to their environment, change their structure, and delay their activity until conditions are favorable. Some malicious programs can even detect whether they are running inside a testing or sandbox environment and remain inactive until they reach a real system. This makes detection far more challenging and reduces the effectiveness of traditional security tools.
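To see why exact signatures struggle against code that changes its structure, here is a minimal Python sketch. It assumes the simplest possible signature scheme: a set of SHA-256 hashes of known-bad files. Real antivirus engines also use byte patterns, heuristics, and behavioral checks, so treat this as an illustration, not a description of any particular product. The sample bytes and the `SIGNATURES` set are hypothetical placeholders, not real malware.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of samples already known to be bad.
# The byte string is a harmless placeholder standing in for a file's contents.
KNOWN_SAMPLE = b"placeholder bytes standing in for a known malicious file"
SIGNATURES = {hashlib.sha256(KNOWN_SAMPLE).hexdigest()}


def matches_signature(file_bytes: bytes) -> bool:
    """Return True if the file's SHA-256 digest appears in the signature set."""
    return hashlib.sha256(file_bytes).hexdigest() in SIGNATURES


# The original sample is caught...
print(matches_signature(KNOWN_SAMPLE))  # True

# ...but changing a single byte yields a completely different digest,
# so the exact-hash signature no longer matches.
variant = KNOWN_SAMPLE[:-1] + b"!"
print(matches_signature(variant))  # False
```

Because a cryptographic hash changes completely when any byte changes, a program that alters even a small part of itself on each copy slips past this kind of check. That is one reason defenders have moved toward behavior-based detection.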

Artificial intelligence has also enabled large-scale attacks with minimal resources. Tasks that once required teams of attackers can now be performed automatically. Scanning thousands of systems, identifying vulnerabilities, and prioritizing targets can all be done with limited human involvement. This means that a single attacker, supported by automated tools, can cause damage on a scale that was previously difficult to achieve.

One of the biggest challenges for defenders is that many of these attacks closely resemble normal system activity. Network traffic may look legitimate, user behavior may appear normal, and system logs may not show obvious warning signs. As a result, attacks are often detected late, sometimes only after damage has already occurred. This delay increases the overall impact and makes recovery more difficult.

Contrary to popular belief, large organizations are not the only targets. Small and medium-sized businesses, startups, and teams with limited security awareness are often more vulnerable. Simpler systems, fewer controls, and slower response times make them attractive targets. In many cases, attackers do not need sophisticated techniques because basic security gaps are enough.

Overall, artificial intelligence has fundamentally changed the cyber threat landscape. Attacks have become more intelligent, more personalized, and more difficult to distinguish from normal activity. Trust in digital communication has become a weak point, and traditional security approaches are no longer sufficient on their own. Cybersecurity has entered a new phase, one that requires a deeper understanding of technology, human behavior, and the interaction between them.

Modern digital environments generate massive amounts of data every second. System logs, network traffic, user behavior, and access patterns quickly exceed what human analysts can manually review. Artificial intelligence plays a key role here by continuously analyzing this data and identifying patterns that would otherwise go unnoticed. Instead of relying only on known attack signatures, many modern security systems also look for abnormal behavior, which lets them flag previously unknown threats as well as known ones.
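As a rough illustration of behavior-based detection, the sketch below builds a baseline from one account's history and flags new values that sit far outside it, using a z-score (the distance from the mean measured in standard deviations). The login counts, the `find_anomalies` function, and the threshold of 3 are all hypothetical. Production systems typically combine many signals and more sophisticated models, but the core idea of comparing activity to a learned baseline rather than to a known signature is the same.

```python
from statistics import mean, stdev


def find_anomalies(history, recent, threshold=3.0):
    """Flag recent values that deviate strongly from a historical baseline.

    history:   past observations (e.g. daily login counts for one account)
    recent:    new observations to score
    threshold: number of standard deviations from the mean treated as abnormal
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least two values
    anomalies = []
    for value in recent:
        if sigma == 0:
            # A perfectly flat baseline: any change at all is unusual.
            z = 0.0 if value == mu else float("inf")
        else:
            z = (value - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((value, round(z, 2)))
    return anomalies


# Hypothetical daily login counts for a single user account.
history = [4, 5, 6, 5, 4, 5, 6, 5, 4, 6]
recent = [5, 7, 40]
print(find_anomalies(history, recent))  # [(40, 42.87)]
```

The spike to 40 logins is flagged even though no signature describes it, while the slightly higher value of 7 stays below the threshold and raises no alert.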

One of the most important advantages of artificial intelligence in cybersecurity is faster detection and response. In many incidents, the damage caused by an attack depends largely on how quickly it is discovered. Intelligent security systems can raise alerts in real time, isolate suspicious activity, and limit access before a threat spreads. This reduction in response time gives security teams the opportunity to investigate incidents while they are still manageable.
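The contain-first idea can be sketched in a few lines. In this hypothetical example, a risk score between 0 and 1 (assumed to come from some upstream detector) decides whether a host is ignored, reported to analysts, or isolated immediately. The `ResponsePlaybook` class, both thresholds, and the host names are illustrative assumptions; here isolation is only recorded in a set, whereas a real deployment would call an endpoint-protection or firewall tool at that point.

```python
from dataclasses import dataclass, field


@dataclass
class ResponsePlaybook:
    """Hypothetical automated-response sketch: contain first, investigate second."""
    alert_threshold: float = 0.6    # assumed score at which analysts are notified
    isolate_threshold: float = 0.9  # assumed score at which a host is cut off
    isolated_hosts: set = field(default_factory=set)
    alerts: list = field(default_factory=list)

    def handle(self, host: str, risk_score: float) -> str:
        if risk_score >= self.isolate_threshold:
            # Placeholder for a real containment action; we only record it.
            self.isolated_hosts.add(host)
            self.alerts.append((host, risk_score, "isolated"))
            return "isolated"
        if risk_score >= self.alert_threshold:
            # Suspicious but not certain: leave it running, queue it for a human.
            self.alerts.append((host, risk_score, "alert"))
            return "alert"
        return "ignored"


playbook = ResponsePlaybook()
for host, score in [("laptop-17", 0.35), ("web-02", 0.72), ("db-01", 0.95)]:
    print(host, playbook.handle(host, score))
# laptop-17 ignored
# web-02 alert
# db-01 isolated
```

Splitting the response into two tiers keeps automation for the clearest cases, where speed matters most, and leaves borderline events to analysts who can investigate before anything is shut down.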

Despite these advantages, technology alone is not enough. Relying entirely on automated systems can create a false sense of security. Even the most advanced tools require proper configuration, continuous monitoring, and regular updates. Without a clear understanding of the organization’s environment and risks, artificial intelligence systems may generate false alerts or miss subtle threats. Cybersecurity remains a process, not a product.
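A small worked example makes the trade-off between false alerts and missed threats concrete. Given scores from a detector and labels saying which events later turned out to be malicious, the hypothetical `alert_outcomes` function counts what a given alert threshold catches, misses, and wrongly flags. The ten scores are made up for illustration.

```python
def alert_outcomes(scores, labels, threshold):
    """Count detection outcomes for one alert threshold.

    scores: anomaly scores produced by a detector
    labels: True if the event was actually malicious (known after investigation)
    """
    caught = sum(1 for s, bad in zip(scores, labels) if s >= threshold and bad)
    false_alerts = sum(1 for s, bad in zip(scores, labels) if s >= threshold and not bad)
    missed = sum(1 for s, bad in zip(scores, labels) if s < threshold and bad)
    return {"caught": caught, "false_alerts": false_alerts, "missed": missed}


# Made-up scores for ten events; two are malicious, one of them subtle (0.45).
scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.65, 0.70, 0.45, 0.92]
labels = [False, False, False, False, False, False, False, False, True, True]

for t in (0.3, 0.5, 0.8):
    print(t, alert_outcomes(scores, labels, t))
# 0.3 {'caught': 2, 'false_alerts': 6, 'missed': 0}
# 0.5 {'caught': 1, 'false_alerts': 4, 'missed': 1}
# 0.8 {'caught': 1, 'false_alerts': 0, 'missed': 1}
```

No threshold is free: the low setting catches the subtle attack at the cost of six false alerts, while the high setting is quiet but misses it. Deciding where to sit on that curve depends on the organization's environment and risk tolerance, which is the human judgment the paragraph above argues for.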

Human involvement continues to be a critical part of effective defense. Many successful attacks exploit human behavior rather than technical weaknesses. Employees who are unaware of phishing techniques, social engineering tactics, or identity impersonation are more likely to make mistakes that bypass technical controls. For this reason, security awareness training should not be limited to technical teams. Everyone who interacts with digital systems plays a role in maintaining security.

Clear policies and structured processes provide another essential layer of protection. Attacks that rely on urgency and pressure, such as deepfake-based fraud or executive impersonation, are far more effective when decisions are made quickly and without verification. Requiring multi-step approval for sensitive actions, verifying requests through multiple channels, and limiting user privileges can significantly reduce the success of these attacks.
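Below is a minimal sketch of how multi-step approval and verification through a separate channel might be enforced in software. The `SensitiveRequest` class, its two-approver rule, and the callback step are hypothetical. The point is that a convincing voice or email alone cannot move money, because execution depends on several distinct people and on a check made through a channel the attacker does not control.

```python
from dataclasses import dataclass, field


@dataclass
class SensitiveRequest:
    """Hypothetical high-value request that must pass several independent checks."""
    requester: str
    amount: float
    required_approvals: int = 2
    approvers: set = field(default_factory=set)
    verified_out_of_band: bool = False

    def approve(self, approver: str) -> None:
        if approver == self.requester:
            raise ValueError("requesters cannot approve their own request")
        # A set ignores repeated approvals from the same person.
        self.approvers.add(approver)

    def confirm_via_known_channel(self) -> None:
        # Stand-in for calling the requester back on a number from the company
        # directory, never on a number supplied in the (possibly spoofed) request.
        self.verified_out_of_band = True

    def can_execute(self) -> bool:
        return (len(self.approvers) >= self.required_approvals
                and self.verified_out_of_band)


req = SensitiveRequest(requester="ceo@example.com", amount=250_000)
req.approve("finance-lead")
req.approve("finance-lead")   # duplicate approval does not count twice
print(req.can_execute())      # False: one distinct approver, no callback yet
req.approve("controller")
req.confirm_via_known_channel()
print(req.can_execute())      # True
```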

Effective cybersecurity strategies are built on multiple layers of defense. This layered approach combines intelligent tools, human oversight, training, and well-defined procedures. Each layer may have weaknesses, but together they create a more resilient system. When one layer fails, others can still prevent or limit the impact of an attack.
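Under a strong simplifying assumption, that each layer fails independently of the others, the benefit of layering can be expressed as a product of miss rates. The numbers below are invented for illustration, not measured. In practice layers are correlated (a well-crafted deepfake may fool both the employee and the approver), so the real combined rate is likely to be higher than this estimate.

```python
from math import prod

# Assumed, illustrative miss rates: the fraction of attacks each layer fails to stop.
layer_miss_rates = {
    "email filter": 0.30,
    "trained employee": 0.40,
    "approval process": 0.20,
    "endpoint detection": 0.25,
}


def combined_miss_rate(miss_rates):
    """Probability an attack passes every layer, assuming layers fail independently."""
    return prod(miss_rates)


print(round(combined_miss_rate(layer_miss_rates.values()), 4))  # 0.006
```

Even though each individual layer misses 20 to 40 percent of attacks in this idealized model, the stack as a whole lets through about 0.6 percent.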

Small and medium-sized organizations often believe that advanced cybersecurity is only achievable for large enterprises. In reality, these organizations are frequently targeted precisely because they have fewer defenses. Fortunately, cloud-based security solutions and scalable services have made advanced protection more accessible. Basic security practices, combined with awareness and planning, can significantly reduce risk without requiring large budgets.

Looking ahead, cybersecurity is likely to become an ongoing competition between artificial intelligence used for attacks and artificial intelligence used for defense. As attackers continue to refine their techniques, defensive systems will also evolve. Organizations that adapt early, invest in training, and treat security as a continuous effort will be better positioned to respond to future threats.

One of the most important challenges in the coming years will be maintaining trust in digital communication. When voices, images, and messages can be convincingly manipulated, blind trust becomes a liability. Verifying requests, slowing down decision-making in critical situations, and encouraging a culture of careful validation can prevent many successful attacks. In this environment, awareness and caution are just as valuable as advanced technology.

In conclusion, when hackers attack with artificial intelligence, defense must also become smarter. Artificial intelligence is not only a threat but also a powerful tool for protection. The difference lies in how it is used and how well it is integrated with human decision-making and organizational processes. Cybersecurity today is about balance: combining technology, people, and strategy to stay resilient in an increasingly complex digital world.